Monday, March 31, 2008

Offline access to Google Docs

Our team has a real affinity for free-spirited types, and so we spend a lot of time thinking up ways to make Google Docs friendlier even to people on the go. If you're one of those, you already know how you can access your Google Docs from anywhere, how nice it is to avoid having to email yourself files or back up docs with a thumbdrive, and how easily you can collaborate with others.

Of course, there was one teeny thing missing: you needed an Internet connection to make Google Docs work for you. Now, for documents, that's no longer true. As you'll read on the Google Docs blog, starting today and over the coming weeks we're rolling out offline access to word processing documents for Google Docs users. You no longer need an Internet connection when inspiration strikes. Whether you're working on an airplane or in a cafe, you can automatically access all your documents on your own computer.

Friday, March 28, 2008

Privacy made easier

Posted by Jane Horvath, Senior Privacy Counsel, and Peter Fleischer, Global Privacy Counsel

Because we're strongly committed to protecting your privacy, we want to present our privacy practices in the clearest way possible. Over the past year, we've been experimenting with video to clarify and illustrate the privacy practices set forth in our Google Privacy Policy. We've used videos to communicate with you about things like cookies, IP addresses, and logs. (Check out the Google Privacy Channel on YouTube.) And you've told us that the screen shots, whiteboard drawings, and pointers from the engineers and product managers we've captured on video are helping you better understand the fine points of our Privacy Policy.

With that in mind, today we're announcing a revamp of our Privacy Center. The new Center is a one-stop shop for privacy resources in a variety of multimedia formats, designed to help you better understand how we store and use data, how to control whom you share your data with, and how we protect your privacy. We hope this new Center will help you make more informed privacy choices whenever you use Google products and services.

Wednesday, March 26, 2008

Insight into YouTube videos

Posted by Tracy Chan, Product Manager, YouTube

I remember the first time a video I posted to YouTube cracked 100 views. I wasn't so much surprised as curious: Who were these people? How did they find this video? Where did they come from?

Today we're taking our first step towards answering these questions with YouTube Insight, a free tool that enables anyone with a YouTube account -- users, partners, and advertisers -- to view detailed statistics about the videos they upload. For example, uploaders can see how often their videos are viewed in different geographic regions, as well as how popular they are relative to all videos in that market over a given period of time. You can also delve deeper into the lifecycle of your videos, such as how long it takes for a video to become popular and what happens to video views as popularity peaks. For now, you can find the currently available metrics by clicking the "About this Video" button under My account > Videos, Favorites, Playlists > Manage my Videos.
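To make "popular relative to all videos in that market" concrete, here is a minimal sketch of one possible regional popularity index. The post does not say how Insight computes its comparison; the function name `relative_popularity`, the input dictionaries, and the formula (a video's share of a region's views divided by its share of views overall) are all hypothetical illustrations.

```python
def relative_popularity(video_views: dict[str, int],
                        market_views: dict[str, int]) -> dict[str, float]:
    """Hypothetical index: (video's share of a region's views) divided by
    (video's share of all views). Above 1.0 means the video over-indexes
    in that region; below 1.0 means it under-indexes."""
    total_video = sum(video_views.values())
    total_market = sum(market_views.values())
    index = {}
    for region, views in video_views.items():
        market = market_views.get(region, 0)
        if market == 0 or total_video == 0:
            continue  # no baseline to compare against in this region
        # (views / market) / (total_video / total_market), as one division
        index[region] = (views * total_market) / (market * total_video)
    return index
```

For instance, a video with 300 of a region's 10,000 views (3%) that holds 1% of views overall would score about 3.0 there.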

Insight gives the creators an inside look into the viewing trends of their videos on YouTube, and helps them to increase views and become more popular. Partners can evaluate metrics to better serve and understand their audiences, as well as increase ad revenue. And advertisers can study their metrics and successes to tailor their marketing -- both on and off the site -- and reach the right viewers. As a result, Insight turns YouTube into one of the world's largest focus groups.

There's more about this on the YouTube blog.

Today is Document Freedom Day

Posted by Zaheda Bhorat, Open Source Programs Manager

Today, the world is celebrating the first-ever Document Freedom Day. More than 200 teams in 60 countries are spending today raising awareness about document freedom by hosting speakers, events, and literally raising the DFD flag. Through such activities, these teams are committed to spreading the word about the importance of open documents and the workable open standards that ensure your access to your documents now and in the future. We at Google wholeheartedly join the community of users, organisations, businesses, governments and individuals around the world in today's celebration.

Our mission concerning the world's information is well known. Naturally, your access to your information is also important to us. When you save a document, you need to be sure that the information in it will be accessible tomorrow, a month from now, ten years from now. How and where you choose to access your documents shouldn't make a difference. This is what Document Freedom Day is about.

Five years ago, who would have thought that we'd be accessing the documents we created then on our cell phones? And yet today we expect this. The standard by which your document is formatted today absolutely needs to be readable and available to those who design the technology for tomorrow. This is the only way that you will know for sure that the information you entrust to your documents now will be yours for as long as you want it to be.

So wherever you are, join the fun and support your freedom to access your information. Find out more and help to spread the word: Document freedom means freedom of information for all of us, now, later and long, long into the future.

Tuesday, March 25, 2008

Making search better in Catalonia, Estonia, and everywhere else

Posted by Paul Haahr and Steve Baker, Software Engineers, Search Quality

We recently began a series of posts on how we harness the power of data. Earlier we told you how data has been critical to the advancement of search, and how we use data to make our products safe and to prevent fraud. This post is the newest in the series. -Ed.

One of the most important uses of data at Google is building language models. By analyzing how people use language, we build models that enable us to interpret searches better, offer spelling corrections, understand when alternative forms of words are needed, offer language translation, and even suggest when searching in another language is appropriate.

One place we use these models is to find alternatives for words used in searches. For example, for both English and French users, "GM" often means the company "General Motors," but our language model understands that in French searches like seconde GM, it means "Guerre Mondiale" (World War), whereas in STI GM it means "Génie Mécanique" (Mechanical Engineering). Another meaning in English is "genetically modified," which our language model understands in GM corn. We've learned this based on the documents we've seen on the web and by observing that users will use both "genetically modified" and "GM" in the same set of searches.
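The "GM" examples show an abbreviation whose meaning depends on the words around it. The toy sketch below picks a sense by counting overlapping context words. The `SENSES` table and its cue words are hand-written assumptions for illustration only; the post explains that Google learns such associations from web documents and search behavior, not from a fixed list like this.

```python
# Hypothetical, hand-written cue words for each sense of "gm".
# Insertion order matters: the first sense is the fallback when no cue matches.
SENSES = {
    "gm": {
        "general motors": {"cars", "trucks", "stock", "detroit"},
        "guerre mondiale": {"seconde", "première"},
        "génie mécanique": {"sti", "ingénieur", "école"},
        "genetically modified": {"corn", "food", "crops", "soy"},
    },
}


def best_sense(query: str, abbrev: str = "gm") -> str:
    """Return the sense of `abbrev` whose cue words best overlap the query."""
    words = query.lower().split()
    context = {w for w in words if w != abbrev}
    senses = SENSES[abbrev]
    # max() keeps the first of several equal scores, so with no matching
    # context the most common (first-listed) sense wins.
    return max(senses, key=lambda sense: len(senses[sense] & context))
```

With these assumed cues, `best_sense("seconde GM")` selects "guerre mondiale" and `best_sense("GM corn")` selects "genetically modified".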

We use similar techniques in all languages. For example, if a Catalan user searches for resultat elecció barris BCN (searching for the result of a neighborhood election in Barcelona), Google will also find pages that use the words "resultats" or "eleccions" or that talk about "Barcelona" instead of "BCN." And our language models also tell us that the Estonian user looking for Tartu juuksur, a barber in Tartu, might also be interested in a "juuksurisalong," or "barber shop."

In the past, language models were built from dictionaries by hand. But such systems are incomplete and don't reflect how people actually use language. Because our language models are based on users' interactions with Google, they are more precise and comprehensive -- for example, they incorporate names, idioms, colloquial usage, and newly coined words not often found in dictionaries.

When building our models, we use billions of web documents and as much historical search data as we can, in order to have the most comprehensive understanding of language possible. We analyze how our users searched and how they revised their searches. By looking across the aggregated searches of many users, we can infer the relationships of words to each other.

Queries are not made in isolation -- analyzing a single search in the context of the searches before and after it helps us understand a searcher's intent and make inferences. Also, by analyzing how users modify their searches, we've learned related words, variant grammatical forms, spelling corrections, and the concepts behind users' information needs. (We're able to make these connections between searches using cookie IDs -- small pieces of data stored in visitors' browsers that allow us to distinguish different users. To understand how cookies work, watch this video.)
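The reformulation idea above can be sketched as follows: group queries by cookie ID, order each group by time, and count single-word substitutions between consecutive queries. This is a minimal illustration assuming a hypothetical log of `(cookie_id, timestamp, query)` tuples; it is not Google's pipeline, which the post does not describe at this level.

```python
from collections import Counter, defaultdict


def substitution_counts(log):
    """Count (old_word, new_word) pairs where a user changed exactly one word
    between two consecutive searches. `log` is a hypothetical iterable of
    (cookie_id, timestamp, query) tuples."""
    sessions = defaultdict(list)
    for cookie_id, ts, query in log:
        sessions[cookie_id].append((ts, query.lower().split()))

    counts = Counter()
    for events in sessions.values():
        events.sort(key=lambda e: e[0])  # order each user's searches in time
        for (_, prev), (_, curr) in zip(events, events[1:]):
            if len(prev) != len(curr):
                continue  # only consider same-length rewrites in this sketch
            diffs = [(a, b) for a, b in zip(prev, curr) if a != b]
            if len(diffs) == 1:
                counts[diffs[0]] += 1
    return counts
```

Aggregated over many users, a pair such as ("juuksur", "juuksurisalong") that recurs across sessions suggests related words. A real system would also need thresholds and many more signals than this.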

To provide more relevant search results, Google is constantly developing new techniques for language modeling and building better models. One element in building better language models is using more data collected over longer periods of time. In languages with many documents and users, such as English, our language models allow us to improve results deep into the "long tail" of searches, learning about rare usages. However, for languages with fewer users and fewer documents on the web, building language models can be a challenge. For those languages we need to work with longer periods of data to build our models. For example, it takes more than a year of searches in Catalan to provide as much data as a single day of searching in English; for Estonian, more than two and a half years' worth of searching is needed to match a day of English. Having longer periods of data enables us to improve search for these less commonly used languages.
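A rough worked reading of the Catalan and Estonian figures, assuming (as simplifications of our own) that "a year" means 365 days and that each language's daily search volume stays roughly constant. Let $V_{\text{en}}$, $V_{\text{ca}}$, and $V_{\text{et}}$ be average daily search volumes:

```latex
365\,V_{\text{ca}} \lesssim V_{\text{en}}
  \;\Longrightarrow\;
  \frac{V_{\text{ca}}}{V_{\text{en}}} \lesssim \frac{1}{365} \approx 0.27\%

2.5 \times 365\,V_{\text{et}} \lesssim V_{\text{en}}
  \;\Longrightarrow\;
  \frac{V_{\text{et}}}{V_{\text{en}}} \lesssim \frac{1}{912.5} \approx 0.11\%
```

Put the other way around, matching $N$ days of English data requires more than $365N$ days of Catalan searches and roughly $912N$ days of Estonian searches.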

At Google, we want to ensure that we can help users everywhere find the things they're looking for; providing accurate, relevant results for searches in all languages worldwide is core to Google's mission. Building extensive models of historical usage in every language we can, especially when there are few users, is an essential piece of making search work for everyone, everywhere.

A common sense approach to Internet safety

Posted by Elliot Schrage, Vice President of Global Communications and Public Affairs

Over the years, we've built tools and offered resources to help kids and families stay safe online. Our SafeSearch feature, for example, helps filter explicit content from search results.

We've also been involved in a variety of local initiatives to educate families about how to stay safe while surfing the web. Here are a few highlights:

- In the U.S., we've worked with Common Sense Media to promote awareness about online safety and have donated hardware and software to improve the ability of the National Center for Missing and Exploited Children to combat child exploitation.

- Google UK has collaborated with child safety organizations such as Beatbullying and Childnet to raise awareness about cyberbullying and share prevention messages, and with law enforcement authorities, including the Child Exploitation and Online Protection Centre, to fight online exploitation.

- Google India initiated "Be NetSmart," an Internet safety campaign created in cooperation with local law enforcement authorities that aims to educate students, parents, and teachers across the country about the great value the Internet can bring to their lives, while also teaching best practices for safe surfing.

- Google France launched child safety education initiatives including Tour de France des Collèges and Cherche Net that are designed to teach kids how to use the Internet responsibly.

- And Google Germany worked with the national government, industry representatives, and a number of local organizations recently to launch a search engine for children.

Users can also download our new Online Family Safety Guide (PDF), which includes useful Internet Safety pointers for parents, or check out a quick tutorial on SafeSearch created by one of our partner organizations, GetNetWise.

We all have roles to play in keeping kids safe online. Parents need to be involved with their kids' online lives and teach them how to make smart decisions. And Internet companies like Google need to continue to empower parents and kids with tools and resources that help put them in control of their online experiences and make web surfing safer.

OpenSocial continues to grow: Welcome, Yahoo!

Posted by Dan Peterson, Product Manager

Last November, OpenSocial was created to help build infrastructure for the social web. OpenSocial provides a common mechanism for developers to easily hook into many different social networks and extend their functionality. Sites including MySpace and orkut have begun to provide OpenSocial applications to their users, and hi5 will be rolling out next week.

Today we're pleased that Yahoo! has announced its support for OpenSocial. We're looking forward to having Yahoo! users join the hundreds of millions of people who will soon enjoy OpenSocial applications. This addition means even more distribution for developers, encourages participation by even more websites, and, most importantly, results in more features for users all across the web.

In addition, Yahoo!, MySpace, and Google are joining with the broader community to create a non-profit foundation to foster the continued open development of OpenSocial. To that end, we've also launched OpenSocial.org, designed to become the main documentation hub and primary source of information about OpenSocial. To learn more, and to get involved, please review the foundation proposal.

With that, welcome, Yahoo! We look forward to growing the social web together.

Thursday, March 20, 2008

The end of the FCC 700 MHz auction

Posted by Richard Whitt, Washington Telecom and Media Counsel, and Joseph Faber, Corporate Counsel

This afternoon the Federal Communications Commission announced the results of its 700 MHz spectrum auction. While the Commission's anti-collusion rules prevent us from saying much at this point, one thing is clear: although Google didn't pick up any spectrum licenses, the auction produced a major victory for American consumers.

We congratulate the winners and look forward to a more open wireless world. As a result of the auction, consumers whose devices use the C-block of spectrum soon will be able to use any wireless device they wish, and download to their devices any applications and content they wish. Consumers soon should begin enjoying new, Internet-like freedom to get the most out of their mobile phones and other wireless devices.

We'll have more to say about the auction in the near future. Stay tuned.

New Google AJAX Language API - Tools for translation and language detection

Posted by Brandon Badger, Product Manager

The main goal of our AJAX APIs team is to provide developers with the tools needed to create the next generation of great web applications. Our 20% goal is world peace. What better way to help further both objectives than to launch a Language API? :)

The API helps developers automatically translate content in their applications, so users of those sites will have an easier time communicating across language barriers.

The Language API provides both translation and language detection.

For more information on how to use the Language API in your code, please refer to the documentation here.
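To give a rough sense of what "language detection" means, here is a toy stopword-counting detector. This is a different, much simpler technique than whatever the Language API uses internally, which the post does not describe. The `STOPWORDS` lists and the `detect_language` function are hypothetical and far too small for real use.

```python
import re
from typing import Optional

# Hypothetical, deliberately tiny stopword lists for three languages.
STOPWORDS = {
    "en": {"the", "and", "is", "of", "to", "on", "in"},
    "fr": {"le", "la", "les", "et", "est", "de", "sur"},
    "es": {"el", "la", "los", "y", "es", "de", "en"},
}


def detect_language(text: str) -> Optional[str]:
    """Guess a language code by counting stopword hits; None if nothing matches."""
    words = re.findall(r"\w+", text.lower())
    scores = {lang: sum(w in sw for w in words) for lang, sw in STOPWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None
```

Counting common function words works only for longer snippets in a handful of languages. Production detectors generally rely on statistical models over character sequences instead.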

Wednesday, March 19, 2008

Gadget maker goes global

Posted by Sheridan Kates, Associate Product Manager, iGoogle

Last year we introduced a feature on iGoogle so you can create a personalized gadget with just a few clicks — no programming skills necessary. We've loved hearing all the stories from people who are enjoying this feature, such as one couple who live thousands of miles apart and use the GoogleGram gadget to send daily love notes. We've also heard from a lot of people who use the Framed Photo gadget to share the latest family snaps with relatives in far-off countries.

Well, today you can do this in all 42 languages for which we support iGoogle. We're looking forward to seeing even more stories like these roll in from across the globe.

We hope you enjoy building and sharing your own gadgets, wherever in the world you might be.

An appreciation of Arthur C. Clarke

Posted by Vint Cerf, Chief Internet Evangelist, and Bill Coughran, Senior VP, Engineering

"Any sufficiently advanced technology is indistinguishable from magic." (Clarke's Third Law)

How do you summarize a man like Arthur C. Clarke? The 90-year-old futurist and science fiction writer, who described himself as a "serial processor", died yesterday in Sri Lanka, his long-time home. Among the authors of the Golden Age of the genre in the 1950s, Clarke is a giant whose creative ideas have found purchase in the real world -- most notably the notion of a synchronous communication satellite, which he envisioned in 1945, but which did not become a reality for 20 more years.

Clarke's The Deep Range (1957) painted a world economy that harvested the bounty of the sea and incorporated humans adapted to that environment. In his earlier works, there is a strong scientific element that lends credibility to the worlds he envisioned. His more recent work has added more deeply philosophical themes. Clarke is probably best known for his book and co-authorship with Stanley Kubrick of the screenplay for the epochal 2001: A Space Odyssey and the sequels to that cultural milestone -- but his two most compelling contributions may be the ability to envision worlds and societies based on premises other than our own, and his dramatic and effective advocacy of science and technology.

He did not squander his celebrity; instead, he used his iconic status to draw public attention to matters of global importance. We owe him gratitude not only for his remarkable talent for cerebral entertainment but also for his exceptional ability to make us think. Especially noteworthy now is this 9-minute video, which he prepared on his 90th birthday last December. As usual, it is rich with forward-thinking ideas.

Not a few Googlers are who they are today because his work has been a source of inspiration and aspiration. We take a tiny bit of pride in the fact that Google is a "sufficiently advanced technology" that will make it easy for millions of people to find him.

Perhaps the most fitting summary of his life, paraphrasing the famous Vulcan greeting, is that he lived long and prospered! May his views continue to inspire for eons.

"Any sufficiently advanced technology is indistinguishable from magic." (Clarke's Third Law)

How do you summarize a man like Arthur C. Clarke? The 90-year-old futurist and science fiction writer, who described himself as a "serial processor", died yesterday in Sri Lanka, his long-time home. Among the authors of the Golden Age of the genre in the 1950s, Clarke is a giant whose creative ideas have found purchase in the real world -- most notably the notion of a synchronous communication satellite, which he envisioned in 1945, but which did not become a reality for 20 more years.

Clarke's The Deep Range (1957) painted a world economy that harvested the bounty of the sea and incorporated humans adapted to that environment. In his earlier works, there is a strong scientific element that lends credibility to the worlds he envisioned. His more recent work has added more deeply philosophical themes. Clarke is probably best known for his book and co-authorship with Stanley Kubrick of the screenplay for the epochal 2001: A Space Odyssey and the sequels to that cultural milestone -- but his two most compelling contributions may be the ability to envision worlds and societies based on premises other than our own, and his dramatic and effective advocacy of science and technology.

He has not squandered celebrity, but used his iconic status to draw public attention to things of global importance. We owe him gratitude not only for his remarkable talent for cerebral entertainment, but also for his exceptional ability to make us think. Especially noteworthy now is this 9-minute video, which he prepared on his 90th birthday last December -- as usual, rich with forward-thinking ideas.

Not a few Googlers are who they are today because his work has been a source of inspiration and aspiration. We take a tiny bit of pride in the fact that Google is a "sufficiently advanced technology" that will make it easy for millions of people to find him.

Perhaps the most fitting summary of his life, paraphrasing the famous Vulcan greeting, is that he lived long and prospered! May his views continue to inspire for eons.

Opening Google Docs to users and developers via Gadgets and Visualization API

Posted by Jonathan Rochelle & Nir Bar-Lev, Product Managers

Whenever we're asked "how do people use Google spreadsheets?", we always struggle with where to start. It's not that we can't think of examples, it's just that the examples are all so different, so unique. Sure, there are definitely favorite themes -- sports, finance and, yes, knitting -- but then the examples become so particular to the people and groups who are using them: The beer taster's results. The nursery school class schedule. The biker's riding log. The family reunion plan. The ski-trip sign-up form. Endless examples, all of which, to spreadsheet junkies like us, are interesting.

But while we've always wanted to give people more options to view and use their information in Google Docs, we knew that trying to build all of these one at a time would simply serve too few people, given all the different ways people use and share spreadsheets.

So today we're taking a new path to help developers customize and build on top of Google Docs, with two new tools: Gadgets-in-Docs and the Visualization API.

Instead of delivering just one or two new types of reports, or a new visual map mashup (can you ever get enough of those?), we decided to deliver a platform on which anyone, not just Google, could build the next best thing. We even invited a few developers to try this with us, and they join us in this launch by featuring just a few of their creations, like Panorama's pivot table, or Viewpath's Gantt Chart, or InfoSoft's Funnel Charts -- all great tools for the student and enterprise user alike. We also built a few early gadgets ourselves which you might find useful.

We borrowed the Gadgets-in-Docs concept from the iGoogle team, so it's only fitting that you can also publish your spreadsheet gadgets to iGoogle, where you can see your data-based gadget right next to all the other stuff that's important to you (even if it is just a picture of your dog).

To try it out, go into Google Docs and open up a spreadsheet. Click on the chart icon, and click 'Gadget...'. Pick your gadget, customize it to fit your data, and then publish it out to iGoogle or to any webpage.

If you're a developer and want to reach millions of people with your latest creation, check out the Google Visualization API, courtesy of our visualization team engineers. The Visualization API provides a platform that can be used to create, share and reuse visualizations written by the developer community. It provides a common way (an API) to access structured data sources, the first being Google spreadsheets.
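The value of "a common way to access structured data sources" is that visualizations and data sources no longer need to know about each other. The sketch below illustrates that idea under stated assumptions: the names `DataTable`, `spreadsheet_source`, and `text_bar_chart` are hypothetical and are not the Visualization API's actual interface. Any source that returns the shared table shape can feed any visualization written against it.

```python
from dataclasses import dataclass, field
from typing import Any, List, Tuple


@dataclass
class DataTable:
    """Hypothetical shared table shape: typed columns plus rows of values."""
    columns: List[Tuple[str, str]]  # (label, type), e.g. ("Hours", "number")
    rows: List[List[Any]] = field(default_factory=list)

    def column(self, label: str) -> List[Any]:
        """Return every value in the column with the given label."""
        index = [name for name, _ in self.columns].index(label)
        return [row[index] for row in self.rows]


def spreadsheet_source(cells: List[List[Any]]) -> DataTable:
    """Hypothetical data source: the first row holds headers; a column is
    typed "number" if every body value in it is numeric, else "string"."""
    header, *body = cells
    types = [
        "number" if all(isinstance(row[i], (int, float)) for row in body) else "string"
        for i in range(len(header))
    ]
    return DataTable(list(zip(header, types)), [list(row) for row in body])


def text_bar_chart(table: DataTable, label_col: str, value_col: str) -> str:
    """Toy visualization: works with any DataTable, whatever its source."""
    lines = []
    for label, value in zip(table.column(label_col), table.column(value_col)):
        lines.append(f"{label:<6}{'#' * int(value)} {value}")
    return "\n".join(lines)


# A biker's riding log, one of the spreadsheet uses mentioned in this post.
log = spreadsheet_source([["Day", "Hours"], ["Mon", 2], ["Wed", 1], ["Sat", 4]])
print(text_bar_chart(log, "Day", "Hours"))
```

Swapping in a different source (a database query, a CSV file) would only require another function returning a `DataTable`; the chart code stays unchanged.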


Tuesday, March 18, 2008

Add your business to Local Search in India

Posted by Ravi Yadavilli, Geo Content Operations Manager, and Alok Goel, Product Manager

- Do you own a restaurant in Delhi and wish more people knew about your delicacies?

- Are you an insurance agent seeking new clientele?

- Would you like more tourists for your travel agency in Goa?

- Are you a custom apparel merchandiser in Bangalore seeking to expand your customer base?

Every day, several lakhs of people search the Internet for firms like yours, and being listed in Google local search helps them find you. It's really simple to add your business, and it's free. Call us at 1-800-419-4444 (in India only, for your Indian-based business), SMS "register" to 09900800000, or just add your listing.

Spending a few minutes on this can make all the difference to your business - why not do it now?


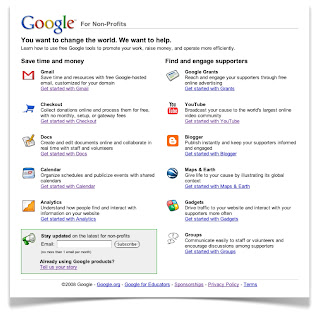

Google for Non-Profits

Posted by Chris Busselle, Investments Manager, Google.org

Many of you spend your days making this world a better place, and we want to do our part to help. Today, we're excited to launch Google For Non-Profits, a one-stop shop for tools to help advance your organization's mission in a smart, cost-efficient way.

This site features ideas and tutorials for how you can use Google tools to promote your work, raise money and operate more efficiently. And to get inspired, you'll also find examples of innovative ways other non-profits are using our products to further their causes. Here are some of the ideas covered:


- When you're writing a grant application, don't get stuck emailing drafts back and forth. Try Google Docs to collaborate on documents with your colleagues.

- Cut costs and save time with Google-hosted email at your own domain. Access your e-mail from any computer with an Internet connection.

- Accept online donations without hassle and with no transaction fees until 2009 with Google Checkout.

- Apply for free online advertising through our Google Grants program to raise awareness and drive traffic to your website.

- Start a blog to keep your supporters informed and engaged.

Using data to help prevent fraud

Posted by Shuman Ghosemajumder, Business Product Manager for Trust & Safety

We recently began a series of posts on how we harness the power of data. Earlier we told you how data has been critical to the advancement of search technology. Then we shared how we use log data to help make Google products safer for users. This post is the newest in the series. -Ed.

Protecting our advertisers against click fraud is a lot like solving a crime: the more clues we have, the better we can determine which clicks to mark as invalid, so advertisers are not charged for them.

As we've mentioned before, our Ad Traffic Quality team built, and is constantly adding to, our three-stage system for detecting invalid clicks. The three stages are: (1) proactive real-time filters, (2) proactive offline analysis, and (3) reactive investigations.

So how do we use log information for click fraud detection? Our logs are where we get the clues for the detective work: they are the repository of data we use to detect patterns, anomalous behavior, and other signals indicative of click fraud.

Millions of users click on AdWords ads every day. Every single one of those clicks -- and the even more numerous impressions associated with them -- is analyzed by our real-time filters (stage 1). This stage uses our log data, but stages 2 and 3 rely even more heavily on deeper analysis of it. In stage 2, for example, our team pores over millions of impressions and clicks -- as well as conversions -- across a longer time period, looking for unusual behavior in hundreds of different data points.
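A stage-1 filter has to decide on each click as it arrives. The post does not describe the actual filters, so the following is only a hypothetical example of the kind of rule that fits this stage: discard a repeat click on the same ad from the same source within a short window. The 60-second window, the `Click` fields, and the anonymous `source` key are all assumptions.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Click:
    source: str       # hypothetical anonymous source key, not an identity
    ad_id: str
    timestamp: float  # seconds since some epoch


class DuplicateClickFilter:
    """Toy stage-1 rule: a click is invalid if the same source clicked
    the same ad within the last window_s seconds."""

    def __init__(self, window_s: float = 60.0) -> None:
        self.window_s = window_s
        self._last_seen: Dict[Tuple[str, str], float] = {}

    def is_valid(self, click: Click) -> bool:
        key = (click.source, click.ad_id)
        previous = self._last_seen.get(key)
        # Record every click, valid or not, so a rapid burst stays invalid.
        self._last_seen[key] = click.timestamp
        return previous is None or click.timestamp - previous > self.window_s


f = DuplicateClickFilter(window_s=60)
print([f.is_valid(Click("s1", "ad1", t)) for t in (0, 10, 100)])  # [True, False, True]
```

A rule like this needs only a small amount of recent state, which is why it can run in real time; the longer-horizon patterns described next call for offline analysis of the logs.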

IP addresses of computers clicking on ads are very useful data points. A simple use of IP addresses is determining the source location of traffic: for a given publisher or advertiser, where are the clicks coming from? Are they all coming from one country or city? Is that normal for an ad of this type? We don't use this information to identify individuals; we look at it in aggregate and study patterns. The information is imperfect, but analyzed in large volumes it is very helpful in preventing fraud. For example, an IP address usually tells us which ISP a person is using. On most home Internet connections it is easy to get a new IP address simply by rebooting a DSL or cable modem, but the new address will still be registered to the same ISP, so additional ad clicks from that machine will still have something in common. An abnormally high number of clicks on a single publisher from the same ISP isn't necessarily proof of fraud, but it does look suspicious and raises a flag for us to investigate. Other information in our logs, such as the browser type and operating system of machines associated with ad clicks, is analyzed in similar ways.
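The ISP example can be made concrete with a small aggregate check. This is a hypothetical sketch, not the Ad Traffic Quality team's method: it counts clicks per (publisher, ISP) pair and flags a publisher when a single ISP accounts for more than an assumed share of its clicks. The `max_share` and `min_clicks` thresholds are illustrative; a real system would compare against what is normal for that kind of ad. As in the text, a flag means "investigate", not "fraud".

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def flag_isp_concentration(
    clicks: Iterable[Tuple[str, str]],
    max_share: float = 0.5,   # assumed threshold
    min_clicks: int = 20,     # assumed minimum volume before judging
) -> Dict[str, Tuple[str, float]]:
    """clicks: (publisher, isp) pairs aggregated from logs.
    Returns {publisher: (top_isp, share)} for publishers whose clicks
    are unusually concentrated on a single ISP."""
    per_publisher: Dict[str, Counter] = defaultdict(Counter)
    for publisher, isp in clicks:
        per_publisher[publisher][isp] += 1

    flags = {}
    for publisher, counts in per_publisher.items():
        total = sum(counts.values())
        if total < min_clicks:
            continue  # too little data to say anything
        top_isp, top_count = counts.most_common(1)[0]
        share = top_count / total
        if share > max_share:
            flags[publisher] = (top_isp, share)
    return flags


clicks = ([("A", "X")] * 25 + [("A", "Y")] * 5
          + [("B", "X")] * 10 + [("B", "Y")] * 10 + [("B", "Z")] * 10
          + [("C", "X")] * 5)
print(flag_isp_concentration(clicks))
```

Here publisher A is flagged because ISP X supplies 25 of its 30 clicks, B is spread evenly across three ISPs, and C has too few clicks to judge.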

These data points are just a few examples of hundreds of different factors we take into account in click fraud detection. Without this information, and enough of it to identify fraud attempted over a longer time period, it would be extremely difficult to detect invalid clicks with a high degree of confidence, and proactively create filters that help optimize advertiser ROI. Of course, we don't need this information forever; last year we started anonymizing server logs after 18 months. As always, our goal is to balance the utility of this information (as we try to improve Google’s services for you) with the best privacy practices for our users.
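The post does not say how server logs are anonymized after 18 months. One commonly described approach, assumed here purely for illustration, is to coarsen IP addresses in old entries by zeroing the last octet. The 548-day approximation of 18 months and the log-entry layout are also assumptions.

```python
import ipaddress
from datetime import datetime, timedelta
from typing import Dict, List

# Assumption: "18 months" approximated as 548 days.
RETENTION = timedelta(days=548)


def anonymize_ipv4(ip: str) -> str:
    """Zero the last octet of an IPv4 address (an assumed technique)."""
    network = ipaddress.IPv4Network(f"{ip}/24", strict=False)
    return str(network.network_address)


def anonymize_old_entries(entries: List[Dict], now: datetime) -> List[Dict]:
    """Return a copy of the log in which entries older than RETENTION
    have their IP address coarsened; newer entries are left unchanged."""
    result = []
    for entry in entries:
        if now - entry["time"] > RETENTION:
            entry = {**entry, "ip": anonymize_ipv4(entry["ip"])}
        result.append(entry)
    return result


log = [
    {"time": datetime(2006, 6, 1), "ip": "203.0.113.77"},
    {"time": datetime(2008, 1, 1), "ip": "198.51.100.23"},
]
print(anonymize_old_entries(log, now=datetime(2008, 3, 18)))
```

Coarsening rather than deleting keeps old entries useful for rough aggregate questions (such as which network a burst of traffic came from) while no longer pinpointing a single address.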

If you want to learn more about how we collect information to better detect click fraud, visit our Ad Traffic Quality Resource Center.


Monday, March 17, 2008

Vote for the YouTube Awards

Posted by Mia Quagliarello, YouTube Team

We're thrilled to say that the second annual YouTube Awards are now live and ready for your vote. This year we've added five new categories: Short Film, Political, Sports, Eyewitness, and Instructional, for a total of 12. So head over to our YouTube Video Awards channel to start watching and voting for some of the videos that defined '07.

You can vote once per day (though you may not change your vote on the same day) and you have until 11:59pm PT this coming Wednesday, March 19th, to do so. We'll announce the winners on Friday, March 21st, at which point we'll feature them on this page and invite them to a special YouTube gathering later this year.

There are more details on the YouTube Blog. Have fun voting!


Thursday, March 13, 2008

Keeping up with Google Apps

Posted by Jeremy Milo, Marketing Manager

During 2007, we introduced 40 significant improvements to Google Apps, which is our suite of communication, collaboration, and security apps for businesses, schools, and organizations. Feedback from many of you, and even our own employees, inspired these changes. And there are many more in store -- the count this year is already up to 11.

Since we introduced Google Apps a little more than a year ago, many of these features have been of particular benefit to businesses, like tools to migrate mail from an old email system to Google Apps; integration of Postini's security and compliance services; support for IMAP in Gmail; syncing tools for Google Calendar; support for dozens of new languages; new mobile access options; and just weeks ago, Google Sites.

We're able to release these sorts of things quickly because all of our apps live "in the cloud." That means updates are continuous: you get improvements without having to wait for new versions, as you do with traditional software. Of course, it may also mean that IT administrators get a little dizzy from the pace of these changes.

The latest improvement will help technology departments, and anyone already using Google Apps, keep up with changes no matter how often they appear: we're now documenting all updates in an RSS feed, so you can be notified as they happen.
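Besides subscribing in a feed reader, an RSS feed like this can be checked from a script. The sketch below uses Python's standard `xml.etree.ElementTree` on an inline sample; the feed content and `example.com` links are hypothetical, and fetching the real feed is left out because its URL is not given here. It reports items whose links have not been seen before.

```python
import xml.etree.ElementTree as ET
from typing import List, Set

# Hypothetical feed content; the real feed's URL and items are not shown here.
SAMPLE_FEED = """<?xml version="1.0"?>
<rss version="2.0"><channel>
  <title>Google Apps updates (sample)</title>
  <item><title>First update</title><link>https://example.com/updates/1</link></item>
  <item><title>Second update</title><link>https://example.com/updates/2</link></item>
</channel></rss>"""


def new_updates(rss_xml: str, seen_links: Set[str]) -> List[str]:
    """Return titles of RSS items whose <link> is not in seen_links."""
    root = ET.fromstring(rss_xml)
    titles = []
    for item in root.iter("item"):
        link = item.findtext("link", default="")
        if link not in seen_links:
            titles.append(item.findtext("title", default="(untitled)"))
    return titles


print(new_updates(SAMPLE_FEED, {"https://example.com/updates/1"}))  # ['Second update']
```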

To subscribe to new updates and see details about past improvements, click this "Add to Google" button:

If there's something you'd like to see in Google Apps, let us know. Feedback like yours shapes its future development.


Space Jam

Posted by Lior Ron, Product Manager

Code Jam is one of our most famous traditions. Programmers compete to hack and solve complex programming challenges in a very short time, and the winners are awarded fame, cash prizes and the opportunity to intern at Google.

Diego Gavinowich from Buenos Aires was a finalist in our Latin America Code Jam, and joined us for a winter internship three months ago (missing summer break in Argentina!). Since he loves astronomy and web hacking, we gave Diego a new Code Jam challenge: code a web version of Google Sky by the end of his time with us.

Well, he did it... with the help of other engineers jamming along on their 20% time. We're very pleased to tell you that Google Sky is now available on the web at sky.google.com. You can search for planets, listen to Earth & Sky podcasts, watch some beautiful Hubble telescope images, or explore historical maps of the sky from the comfort of your browser.

Sky in Google Earth, which launched last August, was originally available only to our 350 million Google Earth users. This release brings the universe to every browser and makes Sky accessible to just about anyone with an Internet connection, from schoolchildren to professional astronomers, in 26 different languages.

To learn more about Google Sky web edition, watch this short video, and read the full story on the Google Lat Long blog.

We'll miss you, Diego!


Using log data to help keep you safe

Posted by Niels Provos, Google Security Team

We recently began two new series of posts. The first, which explains how we harness data for our users, started with this post. The second, focusing on how we secure information and how users can protect themselves online, began here. This post is the second installment in both series. -Ed.

We sometimes get questions about what Google does with server log data, which records how users interact with our services. We take great care in protecting this data, and while we've talked previously about some of the ways it can be useful, we haven't yet covered how it can help us make Google products safer for our users.

While the Internet on the whole is a safe place, and most of us will never fall victim to an attack, there are more than a few threats out there, and we do everything we can to help you stay a step ahead of them. Any information we can gather on how attacks are launched and propagated helps us do so.

That's where server log data comes in. We analyze logs for anomalies or other clues that might suggest malware or phishing attacks in our search results, attacks on our products and services, and other threats to our users. And because we have a reasonably significant data sample, with logs stretching back several months, we're able to perform aggregate, long-term analyses that can uncover new security threats, provide greater understanding of how previous threats impacted our users, and help us ensure that our threat detection and prevention measures are properly tuned.

We can't share too much detail (we need to be careful not to provide too many clues on what we look for), but we can use historical examples to give you a better idea of how this kind of data can be useful. One good example is the Santy search worm (PDF), which first appeared in late 2004. Santy used combinations of search terms on Google to identify and then infect vulnerable web servers. Once a web server was infected, it became part of a botnet and started searching Google for more vulnerable servers. Spreading in this way, Santy quickly infected thousands and thousands of web servers across the Internet.

As soon as Google recognized the attack, we began developing a series of tools to automatically generate "regular expressions" that could identify potential Santy queries and then block them from accessing Google.com or flag them for further attention. But because regular expressions like these can sometimes snag legitimate user queries too, we designed the tools so they'd test new expressions in our server log databases first, in order to determine how each one would affect actual user queries. If it turned out that a regular expression affected too many legitimate user queries, the tools would automatically adjust the expression, analyze its performance against the log data again, and then repeat the process as many times as necessary.
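The test-and-adjust loop described above can be sketched as follows. This is a simplified illustration, not Google's tool: instead of rewriting an expression automatically, it walks a hypothetical list of candidate patterns (broadest first) and keeps the first one that still catches every known attack query while matching no more than an assumed fraction of logged legitimate queries. The query strings are invented examples, not real Santy traffic.

```python
import re
from typing import List, Optional


def false_positive_rate(pattern: str, legit_queries: List[str]) -> float:
    """Fraction of logged legitimate queries the pattern would block."""
    rx = re.compile(pattern)
    hits = sum(1 for q in legit_queries if rx.search(q))
    return hits / len(legit_queries) if legit_queries else 0.0


def refine(candidates: List[str], attack_queries: List[str],
           legit_queries: List[str], max_fp: float = 0.01) -> Optional[str]:
    """Try candidates in order; return the first that matches every known
    attack query and stays under max_fp on the legitimate-query log."""
    for pattern in candidates:
        rx = re.compile(pattern)
        if not all(rx.search(q) for q in attack_queries):
            continue  # too narrow: misses known attack queries
        if false_positive_rate(pattern, legit_queries) <= max_fp:
            return pattern
    return None  # nothing acceptable; a human would need to look


# Hypothetical data for illustration only.
attack = ["inurl:vulnpage.php id=17", "inurl:vulnpage.php id=4521"]
legit = ["php tutorial", "inurl:help.php faq",
         "site:example.com index.php", "weather london"]
candidates = [r"\.php", r"inurl:\S+\.php", r"^inurl:vulnpage\.php id=\d+$"]
print(refine(candidates, attack, legit))
```

The broad patterns are rejected because they would block half or a quarter of the sample legitimate queries; only the narrow one passes. Measuring that cost against real logs before deploying a filter is the point the post makes.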

In this instance, having access to a good sample of log data meant we were able to refine one of our automated security processes, and the result was a more effective resolution of the problem. In other instances, the data has proven useful in minimizing certain security threats, or in preventing others completely. In the end, this means that whenever you use Google search, Google Apps, or any of our other services, your interactions with those products help us learn more about security threats that could affect your online experience. And the better the data we have, the more effectively we can protect all our users.


Book info where you need it, when you need it

Posted by Frances Haugen, Associate Product Manager, and Matthew Gray, Software Engineer, Book Search

Here at Google Book Search we love books. To share this love of books (and the tremendous amount of information we've accumulated about them), today we've released a new API that lets you link easily to any of our books. Web developers can use the Books Viewability API to quickly find out a book's viewability on Google Book Search and, in an automated fashion, embed a link to that book in Google Book Search on their own sites.

As an example of the API in use, check out the Deschutes Public Library in Oregon, which has added a "Preview this book at Google" link next to the listings in its library catalog. Deschutes readers can now preview a book immediately via Google Book Search, which helps them decide whether they'd like to buy it, borrow it from a library, or keep looking because it wasn't really the book they wanted.
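The lookup-then-link flow described above might look roughly like the following minimal TypeScript sketch. The request parameters (`bibkeys`, `jscmd=viewapi`, `callback`) and the response fields (`preview`, `preview_url`, `info_url`) are assumptions here; check them against the API documentation before relying on them. The helper names, the ISBN, the URLs, and the "About this book at Google" label are hypothetical.

```typescript
// Hypothetical sketch: the request parameters (bibkeys, jscmd=viewapi,
// callback) and the response fields below are assumptions to check against
// the API documentation, not a verified contract.

type Viewability = "noview" | "partial" | "full";

interface BookInfo {
  bib_key: string;
  info_url: string;    // page describing the book
  preview_url: string; // page where the book can be previewed
  preview: Viewability;
}

// Build a lookup URL for one or more ISBNs; the response is assumed to be
// JSON wrapped in a call to `callback` (JSONP), keyed by "ISBN:<number>".
function buildRequestUrl(isbns: string[], callback: string): string {
  const bibkeys = isbns.map((isbn) => `ISBN:${isbn}`).join(",");
  return (
    "https://books.google.com/books" +
    `?bibkeys=${encodeURIComponent(bibkeys)}` +
    "&jscmd=viewapi" +
    `&callback=${encodeURIComponent(callback)}`
  );
}

// Decide which link, if any, a catalog page should show for one book.
function previewLink(info: BookInfo | undefined): { href: string; label: string } | null {
  if (info === undefined) return null; // not found: show no link
  if (info.preview === "noview") {
    return { href: info.info_url, label: "About this book at Google" };
  }
  return { href: info.preview_url, label: "Preview this book at Google" };
}

// Made-up response entry; the ISBN and URLs are placeholders.
const response: Record<string, BookInfo> = {
  "ISBN:0000000000": {
    bib_key: "ISBN:0000000000",
    info_url: "https://books.google.com/books?id=EXAMPLE",
    preview_url: "https://books.google.com/books?id=EXAMPLE&printsec=frontcover",
    preview: "partial",
  },
};

const link = previewLink(response["ISBN:0000000000"]);
```

A catalog page would load the generated URL in a script tag and call `previewLink` from the callback for each listing, showing nothing when the book isn't found.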

We think this API will be useful to all sites that connect readers with information about books, from library catalogs to public libraries to universities. To see more implementations in action, read this post on the Book Search blog.

Our solutions for ad serving

Posted by Rohit Dhawan, Senior Product Manager

Earlier this week, we completed our acquisition of DoubleClick. Together, we're now focused on building a full suite of products and tools that help publishers of all sizes improve productivity, manage their inventory, generate additional revenue opportunities and save time so they can focus on what they do best -- creating great content and delivering an exceptional experience to their users.

First, let us address the options publishers have for selling and managing ad space on their websites. Some publishers use ad networks like Google AdSense to fill their ad space. Others employ a direct sales force to manage and sell their ad inventory with solutions like the DoubleClick Revenue Center, and partner with third-party ad networks to fill any unsold space. Either way, without a sophisticated ad management and ad serving solution, it is a challenge for publishers to manage their available inventory effectively and ensure all of their clients' campaigns serve on time.

Today, we're announcing a new tool for publishers with the beta launch of Google Ad Manager. Aimed at the ad management and serving needs of publishers with smaller sales teams, Google Ad Manager is a free, hosted ad and inventory management tool that can help publishers sell, schedule, deliver and measure both their directly sold and their network-based ad inventory. It combines a simple, intuitive user experience and Google speed with a simple tagging process, so publishers can spend more time working with their advertisers and less time on their ad management solution. And by providing detailed inventory forecasts and tracking at a very granular level, Ad Manager helps publishers maximize their inventory sell-through rates.
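Sell-through rate is the share of available (forecast) impressions that have actually been sold. The minimal TypeScript sketch below computes it per ad unit, along with the unsold remainder that a network like AdSense might fill. It assumes hypothetical `Forecast` and `Booking` records and made-up numbers; it is not Ad Manager's real data model.

```typescript
// Hypothetical sketch: field names and numbers are illustrative assumptions,
// not Ad Manager's data model.

interface Forecast {
  adUnit: string;
  availableImpressions: number; // forecast impressions for the period
}

interface Booking {
  adUnit: string;
  impressions: number; // impressions promised to a directly sold campaign
}

interface UnitSummary {
  sellThrough: number; // sold / available, between 0 and 1
  unsold: number;      // left over, e.g. for an ad network to fill
}

function summarize(forecasts: Forecast[], bookings: Booking[]): Map<string, UnitSummary> {
  // Total booked impressions per ad unit.
  const booked = new Map<string, number>();
  for (const b of bookings) {
    booked.set(b.adUnit, (booked.get(b.adUnit) ?? 0) + b.impressions);
  }
  const summary = new Map<string, UnitSummary>();
  for (const f of forecasts) {
    // Cap at availability so an overbooked unit counts as fully sold.
    const sold = Math.min(booked.get(f.adUnit) ?? 0, f.availableImpressions);
    summary.set(f.adUnit, {
      sellThrough: f.availableImpressions === 0 ? 0 : sold / f.availableImpressions,
      unsold: f.availableImpressions - sold,
    });
  }
  return summary;
}

const result = summarize(
  [
    { adUnit: "homepage_top", availableImpressions: 100000 },
    { adUnit: "sidebar", availableImpressions: 50000 },
  ],
  [
    { adUnit: "homepage_top", impressions: 60000 },
    { adUnit: "homepage_top", impressions: 20000 },
    { adUnit: "sidebar", impressions: 10000 },
  ],
);
// homepage_top: sellThrough 0.8, unsold 20000; sidebar: sellThrough 0.2, unsold 40000
```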

Google Ad Manager effectively complements the DoubleClick Revenue Center, which is focused on publishers with larger sales teams. We're excited to add DART for Publishers to our suite of products, and we're committed to the continued development and enhancement of DoubleClick's offerings. Today's announcement demonstrates this commitment, and at the same time furthers our goal of creating new opportunities for publishers of all sizes. Dozens of publishers have been using Google Ad Manager successfully in early trials. To hear what those publishers have to say, check out some Ad Manager success stories or take a product tour to learn more.

As we are still in beta, Google Ad Manager is available to publishers by invitation only. If you're interested in learning more about it or would like to be considered for the program, visit the Google Ad Manager site. Existing DoubleClick customers are not affected by this announcement. As we expand the Google Ad Manager beta program, we will be in touch again to include additional publishers and offer updates on our progress.

Wednesday, March 12, 2008

The most wonderful time of the year

Posted by Ben Lewis, Product Manager

... for fans of college basketball, that is. We're only days away from March Madness, and the question is: who's going dancing? Will a Cinderella team pull off the upset, or will a number one seed take the championship again? These questions and more will be answered over the next few weeks as we watch one of the greatest tournaments in American sports unfold.

I'd like to invite you to join the madness of this year's NCAA tournament. Some of our sports-fan engineers and I have developed the Basketball Bracket Battle gadget for iGoogle to help you track your picks as the tournament progresses. Use it to compete against your friends and coworkers in a pool, or compete with other Bracket Battle gadget users. You'll be able to make your picks and track your progress without ever leaving your homepage.

You can pick your bracket and invite friends to your pool starting on Selection Sunday (March 16th), but feel free to add the gadget to your page anytime starting today. And the madness officially begins on March 20th, so make sure you're all set by then. Come join us for the battle and add the Basketball Bracket Battle to your iGoogle.

We'll see you at the dance!

ARIA For Google Reader: In praise of timely information access

Posted by T.V. Raman, Research Scientist

From time to time, our own T.V. Raman shares his tips on how to use Google from his perspective as a technologist who cannot see -- tips that sighted people, among others, may also find useful.

The advent of RSS and Atom feeds, and the creation of tools like Google Reader for efficiently consuming content feeds, have vastly increased the amount of information we access every day. From the perspective of someone who cannot see, content feeds are one of the major innovations of the century. They give me direct access to the actual content without first having to dig through a lot of boilerplate visual layout, as happens with websites. In addition, all of this content is now available from a single page with a consistent interface.

Until now, I've enjoyed the benefits of Google Reader using a custom client. Today, we're happy to tell you that the "mainstream" Google Reader now works with off-the-shelf screenreaders, as well as Fire Vox, the self-voicing extension to Firefox. This brings the benefits of content feeds and feed readers to the vast majority of visually impaired users.

Google Reader has always had complete keyboard support. With the accessibility enhancements we've added, all user actions now produce the relevant spoken feedback via the user's adaptive technology of choice. This feedback is generated using Accessible Rich Internet Applications (WAI-ARIA), an evolving standard for enhancing the accessibility of Web 2.0 applications. WAI-ARIA is currently supported by Firefox, with support forthcoming in other browsers, and because it is an open standard, the same enhancements should work in those browsers as support arrives. This is one of the primary advantages of building on open standards.
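The post doesn't show Google Reader's code, but one common WAI-ARIA technique for producing spoken feedback is a live region: an element marked with `aria-live` whose content changes are read aloud by supporting screen readers without moving keyboard focus. The minimal TypeScript sketch below assumes that pattern; `makeLiveRegion`, `announce`, `StubElement`, and the announced message are hypothetical, and Reader's actual AxsJAX-based implementation may differ.

```typescript
// Structural type satisfied by a real DOM HTMLElement or a test stub.
interface AnnouncerElement {
  setAttribute(name: string, value: string): void;
  textContent: string | null;
}

// Turn an element into a WAI-ARIA live region. Screen readers that support
// ARIA speak changes to its content without moving keyboard focus.
function makeLiveRegion(
  el: AnnouncerElement,
  politeness: "polite" | "assertive" = "polite",
): void {
  el.setAttribute("aria-live", politeness); // polite: speak at the next graceful opportunity
  el.setAttribute("aria-atomic", "true");   // speak the whole region, not just the change
}

// Replacing the text is what triggers the spoken update.
function announce(el: AnnouncerElement, message: string): void {
  el.textContent = message;
}

// Stub so the sketch runs outside a browser.
class StubElement implements AnnouncerElement {
  attributes = new Map<string, string>();
  textContent: string | null = null;
  setAttribute(name: string, value: string): void {
    this.attributes.set(name, value);
  }
}

const region = new StubElement();
makeLiveRegion(region);
// Hypothetical handler: called when the user presses "j" to open the next item.
announce(region, "Next item: Example feed title");
```

In a browser you would pass a real element instead of the stub, for example a `div` created with `document.createElement("div")` and appended to the page once, then reused for every announcement.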

We originally prototyped these features in Google Reader using the AxsJAX framework. After extensive testing, we've now integrated them into the mainstream product. See the related post on the Google Reader Blog for additional technical details.

Looking forward to a better informed future for all!
